- Elicited zero-shot manipulation of articulated objects of various masses from off-the-shelf VLMs across 5 tasks

- Composed forceful skills in a two-step process: spatial reasoning about an image labeled with the robot coordinate frame, and physical reasoning about object mass, torques, and object-surface interactions.

- Observed emergent jailbroken behaviors arising from this reasoning process, which are addressed in a separate RSS workshop paper.

- Presented poster at Reasoning for Robust Robot Manipulation in the Open World Workshop @ Robotics: Science and Systems (RSS) 2025

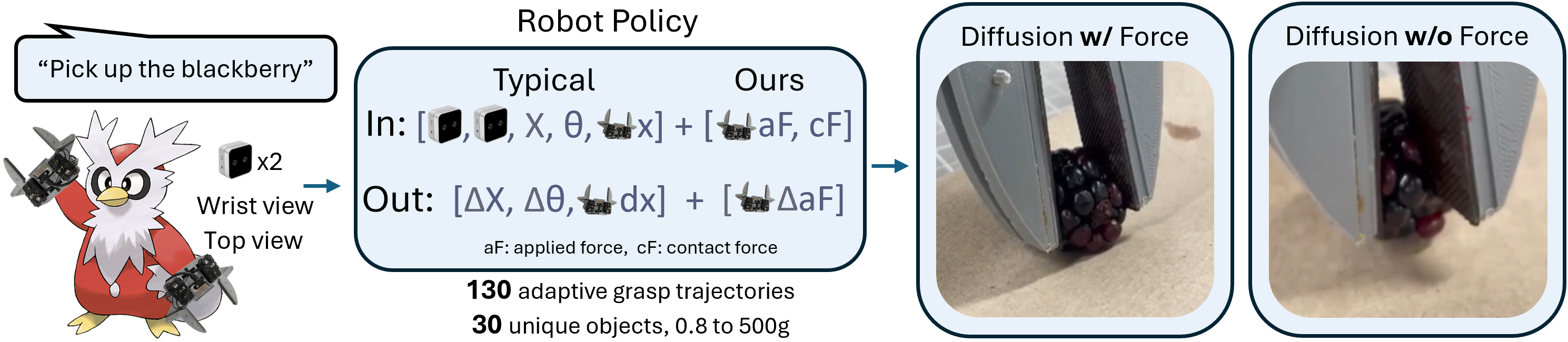

- Showed that grasping diffusion policies conditioned on contact force feedback improved grasp success and reduced object damage compared to policies without force feedback.

- Force-conditioned diffusion policies generalized better to novel delicate fruits, vegetables, and objects.

- Presented poster at Mastering Robot Manipulation in a World of Abundant Data Workshop @ Conference on Robot Learning (CoRL) 2024

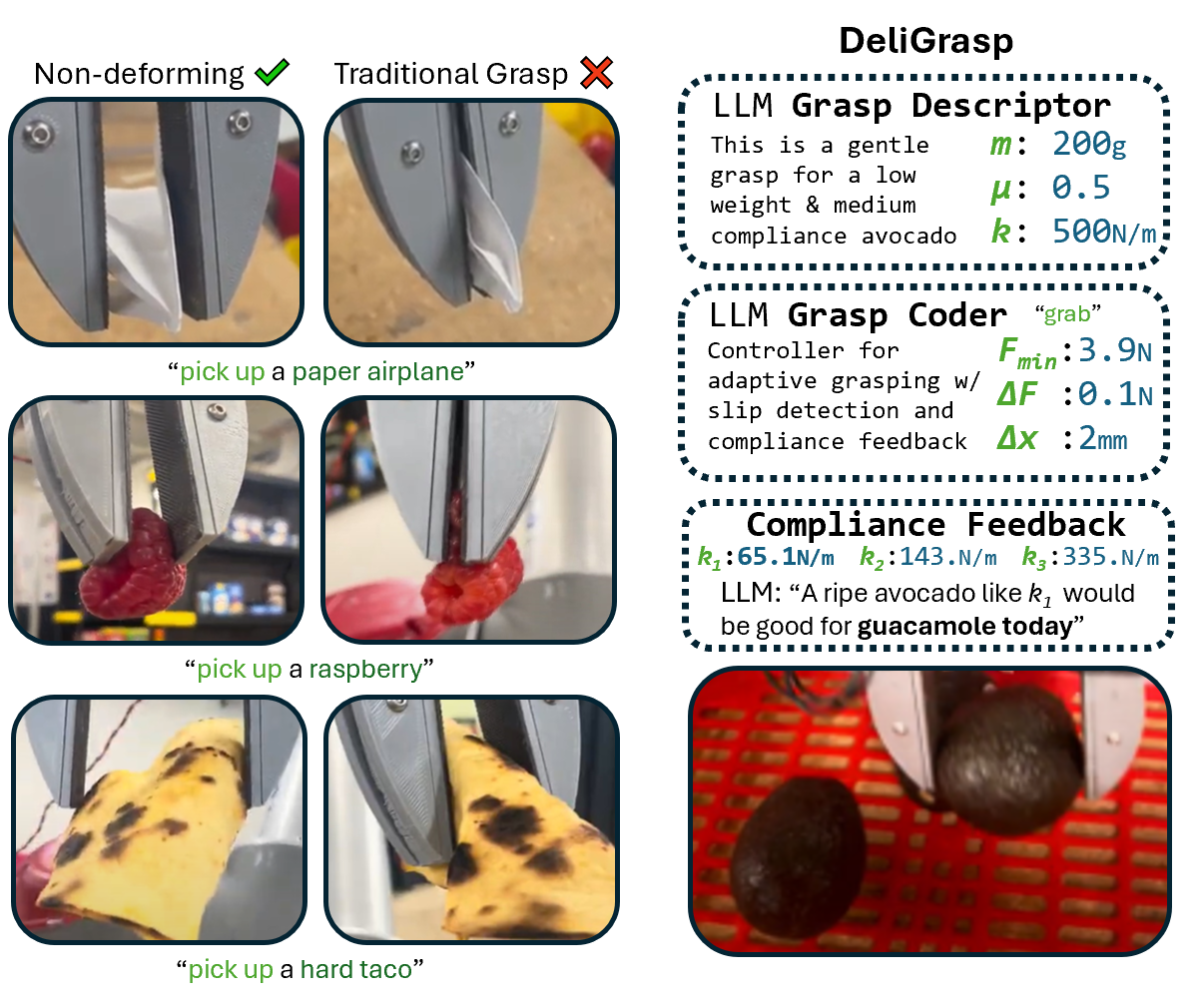

- Demonstrated state-of-the-art single-controller grasping success on delicate objects such as raspberries and paper origami

- Leveraged world-model estimation of object mass, friction, and compliance to simplify and inform low-level grasp optimization

- Fine-tuning language models on object properties improved mass estimation by 10% and increased grasp success on aberrant objects.

- Presented poster at Conference on Robot Learning (CoRL) 2024

Wearable Rings with Deep Gesture Recognition, 2022 Summer Research Internship at Snap Inc.

- Developed a lightweight set of two wearable rings enabling real-time detection of five distinct finger gestures with 95% accuracy

- Investigated feature learning from novel MEMS magnetic field sensors and reconstructed hand pose kinematics with a modified FABRIK solver

- The time-series transformer model generalized to varied hand morphologies, sizes, and gesture styles.
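The FABRIK bullet above does not describe what was modified, so the following is only a minimal sketch of standard, unconstrained FABRIK (Forward And Backward Reaching Inverse Kinematics), not the solver used in the project. It assumes joint positions are 3-D tuples along a single finger chain; the function name `fabrik` and the parameters `tol` and `max_iter` are hypothetical, and real hand-pose reconstruction would also need joint-angle limits that this sketch omits.

```python
import math
from typing import List, Sequence, Tuple

Vec3 = Tuple[float, float, float]


def _lerp(a: Vec3, b: Vec3, t: float) -> Vec3:
    """Return the point a fraction t of the way from a to b."""
    return tuple(ai + t * (bi - ai) for ai, bi in zip(a, b))


def fabrik(joints: Sequence[Vec3], target: Vec3,
           tol: float = 1e-4, max_iter: int = 50) -> List[Vec3]:
    """Standard FABRIK for an unconstrained chain (hypothetical helper).

    Assumes no two consecutive joints coincide, so segment lengths are nonzero.
    """
    p = [tuple(j) for j in joints]
    lengths = [math.dist(p[i], p[i + 1]) for i in range(len(p) - 1)]
    root = p[0]

    if math.dist(root, target) > sum(lengths):
        # Target out of reach: stretch the chain straight toward it.
        for i, d in enumerate(lengths):
            lam = d / math.dist(p[i], target)
            p[i + 1] = _lerp(p[i], target, lam)
        return p

    for _ in range(max_iter):
        if math.dist(p[-1], target) <= tol:
            break
        # Forward reaching: pin the end effector to the target, walk to the root.
        p[-1] = tuple(target)
        for i in range(len(p) - 2, -1, -1):
            lam = lengths[i] / math.dist(p[i + 1], p[i])
            p[i] = _lerp(p[i + 1], p[i], lam)
        # Backward reaching: re-pin the root, walk back to the end effector.
        p[0] = root
        for i in range(len(p) - 1):
            lam = lengths[i] / math.dist(p[i], p[i + 1])
            p[i + 1] = _lerp(p[i], p[i + 1], lam)
    return p
```

Each pass only slides joints along the line to their neighbor, which is why segment lengths are preserved exactly while the end effector converges toward a reachable target.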

- Developed, in a team of four students, novel assistive walking-device mechanisms: modular handles, resistive wheels powered by eddy-current brakes, and variable, controllable weight support

- Used FEA and dynamics analysis in SolidWorks, Inventor CAM, waterjet cutting, CNC milling, laser cutting, 3D printing, and 20+ prototype iterations over two semesters to create the product

- Built on a commercial off-the-shelf (COTS) push-down rollator

- Completed for the 2021-2022 Columbia Mechanical Engineering Senior Design course

- Team: Daniel Kwon, Delphine Lepeintre, William Xie, Eric Xue

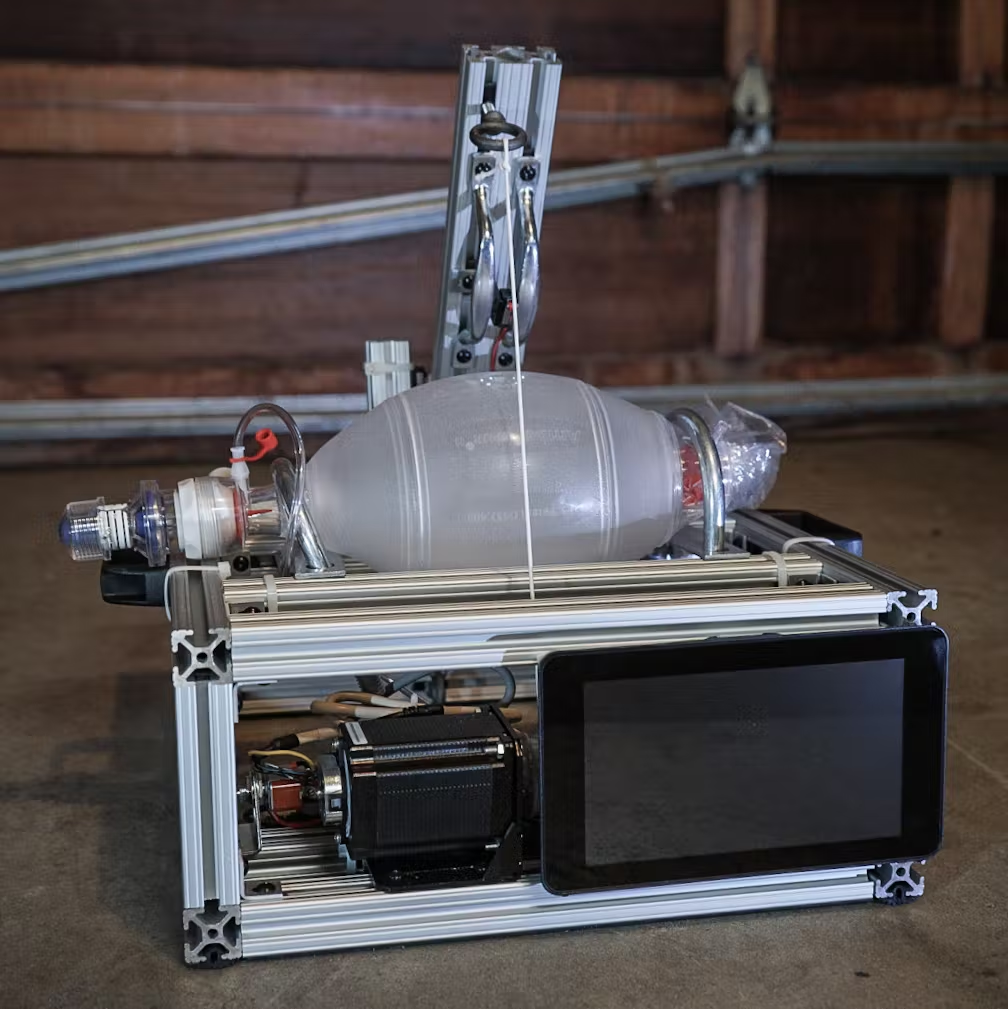

- Developed, in a team of five students, an open-source, fully COTS solution that can be purchased for under $900 and assembled within 2 hours

- Selected from 80 proposals and awarded $8,000 to engineer a rapid-response emergency ventilator for COVID-19 patients

- Implemented a performant real-time control system and user interface in Python and PyQt on a Raspberry Pi 3

- Team: Noah Silverstein, Delphine Lepeintre, William Xie, David Kao, Eric Xue, Neil Nie, Jonathan Sanabria

Collaborations with Mimi Park

Dawning: dust, seeds, Coplees (2022, repo)

- Collaborated with sculptor Mimi Park to create bespoke kinetic sculptures for a gallery show

- Co-ideated, designed, and crafted autonomous cars from Arduinos, DC motors, feathers, pipe cleaners, chimes, and steel wireframes

- Exhibited at Lubov in Spring 2022 and reviewed in Hyperallergic

"Gorbachev" (2021, repo)

- Developed a walking, organically shaped quadrupedal robot resembling the Venezuelan poodle moth

- CAD Model

Gallery View

Making of Gorbachev